Customizing Explainable AI (XAI) and adding trust-as-a-service to your product design

Make your black-box predictions interactive and part of the customer experience

According to an IBM Institute for Business Value survey, 68 percent of business leaders believe that customers will demand more explainability from AI in the next three years.

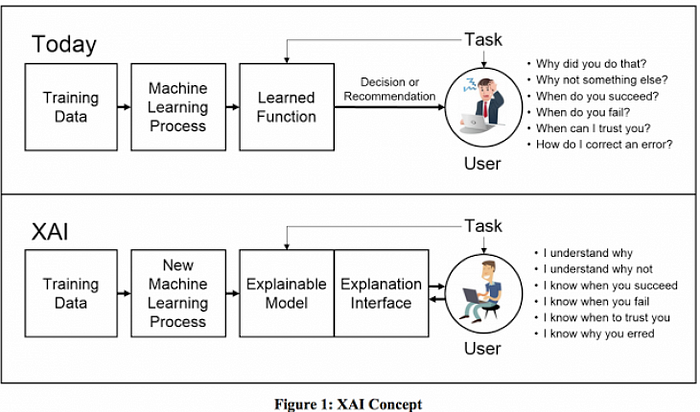

The question is no longer whether explainable AI is necessary, but how it will be used and who will interact with it. I have prepared a simple framework to help you build better explainable AI into your product.

Let’s start by mapping out the basics of your product and your customers.

- What are the characteristics of your product? What is the main value prop?

- Define the inputs to the algorithm and how the algorithm works

- What data is needed to train this algorithm?

- Define the regulations that govern the domain in which the prediction will be applied, and make them part of the explanation

- Why do you need to know the reason for the prediction? How are you going to use it? How is your business going to profit from the explanation?

- What drives your users? What do they want to learn from this prediction? How are they going to use this knowledge?

- How are you going to use the outcome and user choices to improve the algorithm?

After answering the questions above, let’s turn to the usability of the explanation.

- How, and in what context, will the explanation appear within your product? How does it fit with your product and organization design principles?

- Define the main stakeholders who will interact with the prediction and how they might use it

- Define where in the user journey the explanation will appear and what next steps the user can take after learning about the prediction. What does the user need to know to make the next decision?

- How detailed should it be? What should it display, and in what format? Can it be explored at different levels of detail, and how should the user interact with its content? How will it help achieve the task success metrics?

- What else do you want to do around the prediction? For example, do you want to set a benchmark for it and adjust the algorithm, or let users customize the prediction?

Four main stakeholders are likely to interact with the prediction and make decisions based on it:

- Data scientist/ programmer | Why? To improve performance

A data scientist wants to know how the model can be communicated to the people who receive and interpret it. During production, the data scientist will most likely need to explain it to 1) managers, for review before deployment, 2) a lending expert, to compare the model against the expert’s knowledge, or 3) a regulator, to check for compliance. They want to understand the model as a whole and how it could be improved by trying different feature weights and studying the resulting outcomes.

A programmer working on autonomous cars wants to know why the system makes certain decisions. What path does it follow to arrive at a specific result? Understanding this can help developers design better algorithms.

- Consumer | Why? Understand the factors

The consumer wants to understand the results. Am I being treated fairly? What can I change to receive better results? What do other people see, and how will my choices affect me?

- Regulator/ Legislator | Why? To ensure fairness

The regulator wants to learn about the model and the datasets used to train it. Does the model make fair decisions? Is it transparent? Is it biased? Does it comply with data protection regulations? Is the explanation comprehensible to the customer?

- Businesses, end-user decision-makers | Why? Confidence, trust

How does my product support the explanation mechanism and let users interact with it? How do my business metrics depend on explaining the black-box AI? How does my value prop relate to how transparent we are with customers? How do we want to empower our users and make our product more discoverable through explainable AI?

Explainable AI is also valuable to businesses because the way AI is regulated is likely to change in the near future. Having XAI in place can help you stay compliant with industry regulations.

Let’s now focus on the practical application of XAI and how it could be architected.

Example from IBM

IBM has created the AI Explainability 360 open source toolkit, so we can look at their approach. The toolkit is aimed at people who build XAI and want to learn how to implement it in their products, and it also serves an educational purpose.

1st step

Explain the use case

2nd step

Choose a consumer type

3rd step

Define what this consumer wants to know and why it matters in that specific use case.

4th step

The customer can learn which features of the application fall outside the acceptable range and which features have the most impact on the application score.
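To make step 4 concrete, here is a minimal sketch for a simple linear scoring model, where a feature’s contribution is its weight times its distance from a reference value. The feature names, weights, baselines, and acceptable ranges are hypothetical, and this is not the AI Explainability 360 API; the toolkit provides its own, more sophisticated explanation methods.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    value: float
    weight: float    # hypothetical coefficient of a linear scoring model
    baseline: float  # hypothetical reference value, e.g. a population average
    low: float       # hypothetical lower bound of the acceptable range
    high: float      # hypothetical upper bound of the acceptable range


def explain(features):
    """Rank features by impact on the score and flag out-of-range values."""
    # Contribution = weight * (value - baseline); for a linear model these
    # terms add up (before rounding) to score(applicant) - score(baseline).
    contributions = [
        (f.name, round(f.weight * (f.value - f.baseline), 4)) for f in features
    ]
    ranked = sorted(contributions, key=lambda item: abs(item[1]), reverse=True)
    out_of_range = [f.name for f in features if not f.low <= f.value <= f.high]
    return ranked, out_of_range


applicant = [
    Feature("income_k", 42.0, 0.5, 50.0, 30.0, 200.0),
    Feature("debt_ratio", 0.55, -20.0, 0.30, 0.0, 0.40),
    Feature("late_payments", 3, -1.5, 1, 0, 2),
]
ranked, out_of_range = explain(applicant)
print(ranked)        # [('debt_ratio', -5.0), ('income_k', -4.0), ('late_payments', -3.0)]
print(out_of_range)  # ['debt_ratio', 'late_payments']
```

The ranked list maps directly to the “most impact” view, and the out-of-range list to the “outside the acceptable range” view; a real product would replace the toy model with its own and choose baselines deliberately, since contributions are only meaningful relative to them.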

Product design implications of XAI

- Adding value at each disclosure level

With explanations, we need to be deliberate about how the information is presented to the user. Since there may be a lot of information to take in at a glance, dividing the content into progressive disclosure levels, as presented by SAP (image above), might be a great solution. Users who want to learn more can always dive deeper, but we focus on showing the most relevant information at first glance.

- Strengths and weaknesses of the prediction

- Showing levels of fairness, robustness, explainability, and lineage, and providing the insights needed to fully understand each feature of the AI model

- How the algorithm will behave in the future

- Ability to trace decisions that lead to the best outcomes

- Playing with the prediction by manipulating the features and the type of explanation

- Asking more complex questions and receiving detailed analysis

- Controlling quality and marking areas for improvement

- Depending on your risk criteria, you can see what increases or decreases the risk

- Depending on your KPIs, you can see what contributes to them and how to stay on track to reach your goals
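The “play with the prediction” and “what increases or decreases the risk” ideas above can be sketched as a what-if query: change one feature, rescore, and report the difference. The logistic model, its weights, and the feature names below are hypothetical placeholders for whatever model your product actually uses.

```python
import math

# Hypothetical logistic risk model; weights and bias are illustrative only.
WEIGHTS = {"debt_ratio": 4.0, "late_payments": 0.6, "income_k": -0.03}
BIAS = -1.0


def risk(applicant):
    """Probability of the 'risky' class under the toy logistic model."""
    z = BIAS + sum(w * applicant[name] for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def what_if(applicant, feature, new_value):
    """Change in risk if a single feature took a different value."""
    changed = {**applicant, feature: new_value}
    return risk(changed) - risk(applicant)


applicant = {"debt_ratio": 0.55, "late_payments": 3, "income_k": 42}
for feature, new_value in [("late_payments", 0), ("debt_ratio", 0.8), ("income_k", 60)]:
    delta = what_if(applicant, feature, new_value)
    direction = "lowers" if delta < 0 else "raises"
    print(f"Setting {feature} to {new_value} {direction} risk by {abs(delta):.1%}")
```

Changing one feature at a time keeps each answer easy to read; combinations of changes can be explored the same way by passing several overrides into the copied dictionary.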

Customer-facing solution ideas

In my previous article, ➡️ AI experience manager for the network of intelligent ecosystems ⬅️, I showed an example where the user interacts with the prediction to arrive at a decision. XAI could give the user enough information to make the right decision, or answer relevant questions that help the user decide. It plays a supportive role and doesn’t create any specific bias.

- A quiz in which the user answers a set of relevant questions, giving the model the additional information it needs to make a prediction

- Depending on the desired outcome, you can get a set of data to support your decision

- Suggesting solutions and presenting different possible versions of reality, both short-term and long-term, shaped by the decisions you might take

- XAI could advise you which decision is in the best interest of society, the individual, the planet, or the community

- Explanations could be categorized to provide you with insights that are already filtered and customized for easy digestion

- Select a set of filters for your explanations to arrive at the right format

- Choose a form of explanation, decide on the preferred visual format

- Ask for a comparison with your peers. How would your community choose? You can decide whether you want to be biased toward what your peers are choosing

- Setting weights for the various features that might affect your credit score, health score, etc. XAI could work with your DID (decentralized identifier) to show transparently how the choices you make affect different areas of your life. Some aspects might matter more for a specific area of your life and others less.

- The prediction could include a warning about how certain decisions can put the user into a risk zone, outlining which aspects contribute to that risk. It could also highlight which actions would move the user from the risk zone to a no-risk zone.

- Choose the features you would like highlighted when you receive predictions

- Show the user the path the system took to arrive at a specific prediction, using progressive disclosure for users who want to learn more, and perhaps let them add more data if they don’t agree with how the prediction was made

- Give the user an option to dispute the prediction

- Users should be able to set how predictions appear on their device, in which modes, and in which format: a notification, within an app only, or on the lock screen?
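The risk-zone warning described in the list above can be sketched as a simple counterfactual check: if the score crosses a threshold, report which features push it up and which single actions would bring it back below the threshold. The toy model, the 0.5 threshold, and the action catalogue are all hypothetical, chosen only to illustrate the idea.

```python
import math

# Hypothetical toy model and threshold, for illustration only.
WEIGHTS = {"debt_ratio": 4.0, "late_payments": 0.6, "income_k": -0.03}
BIAS = -1.0
RISK_THRESHOLD = 0.5  # scores at or above this fall in the "risk zone"

# Hypothetical user actions, each mapped to the feature change it would cause.
ACTIONS = {
    "pay debt down to a 0.30 ratio": ("debt_ratio", 0.30),
    "clear all late payments": ("late_payments", 0),
    "raise income to 60k": ("income_k", 60),
}


def risk(applicant):
    """Probability of the 'risky' class under the toy logistic model."""
    z = BIAS + sum(w * applicant[name] for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def risk_warning(applicant):
    """Return None outside the risk zone; otherwise drivers and escape actions."""
    score = risk(applicant)
    if score < RISK_THRESHOLD:
        return None
    # Features whose term adds to the log-odds, largest first.
    drivers = sorted(
        (name for name, w in WEIGHTS.items() if w * applicant[name] > 0),
        key=lambda name: WEIGHTS[name] * applicant[name],
        reverse=True,
    )
    # Single actions that would, on their own, bring the score below the threshold.
    escapes = [
        action
        for action, (feature, value) in ACTIONS.items()
        if risk({**applicant, feature: value}) < RISK_THRESHOLD
    ]
    return {"risk": round(score, 3), "drivers": drivers, "escape_actions": escapes}


warning = risk_warning({"debt_ratio": 0.55, "late_payments": 3, "income_k": 42})
print(warning)
```

Here only clearing the late payments moves this applicant out of the risk zone on its own; paying down debt still helps, which a product could show as a partial improvement rather than hiding it.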

AI patterns that could help with better UX of the XAI

- Algorithm Effectiveness Rating. Informing the user about the accuracy rate of the prediction before giving the result

- Setting Expectations & Acknowledging Limitations. Guiding the user on how the prediction should be used, for example, that it shouldn’t be relied upon and followed 100%. It sets expectations about the quality of the result.
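The two patterns above can be combined in a few lines: measure how often the model was right on past, labelled cases, then show that rating and a short limitation note before the result. The function names and wording are hypothetical, and plain accuracy is only one possible effectiveness measure; calibrated probabilities or per-segment error rates may suit some products better.

```python
def effectiveness_rating(predictions, outcomes):
    """Share of past predictions that matched the observed outcome."""
    if not outcomes or len(predictions) != len(outcomes):
        raise ValueError("need equally sized, non-empty lists")
    hits = sum(p == o for p, o in zip(predictions, outcomes))
    return hits / len(outcomes)


def present(prediction, accuracy):
    """Lead with the rating and a limitation note, then give the result."""
    return (
        f"This model matched the real outcome in {accuracy:.0%} of past cases, "
        f"so treat it as guidance, not a final answer. Prediction: {prediction}"
    )


accuracy = effectiveness_rating(
    ["approve", "deny", "approve", "approve"],
    ["approve", "deny", "deny", "approve"],
)
print(present("approve", accuracy))
```

Showing the rating first, as the Algorithm Effectiveness Rating pattern suggests, lets the user calibrate their trust before they read the result itself.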

More to explore: https://smarterpatterns.com/patterns

If you are interested in learning more about my research, please check out two of my latest publications:

How quantum computing will affect Generation Z?

and

Don’t hesitate to get in touch via email.